About two years ago, Ole Lensmar took part in an API conference and afterwards shared his ideas on API testing and debugging from the session he joined. We found the article interesting, and that is why we are sharing it here.

This blog post is loosely based on a session on “API Testing and Debugging” that I was part of at a major API conference a couple of weeks ago. This time around, instead of telling the audience about cool tools for API testing and quality, old and new, I wanted to take a step back, talk about testing and quality at a higher level, and give some insight into an important debate in the testing community and how it applies to API testing as well.

To do that, let’s start by considering the following:

- Given you are attending a major API conference

- When you are in a session about API Testing and Debugging

- Then you can hear the moderator introduce the speakers

As you might know, this is a specification-by-example scenario that uses something called Gherkin to express a business requirement. Scenarios like these are often run through a framework like Cucumber, which maps each step to code and then automatically executes and validates it. What’s especially cool about this approach is that it strives to express high-level requirements in a way that both business and technical people can use, so that written tests aren’t something only developers can work with. There are a huge number of well-established frameworks out there for verifying that software works as intended, ranging from the ultra-simple (like JUnit) to the utterly complex (like Selenium).
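To make the “maps each step to code” idea concrete, here is a minimal Python sketch of what a step-definition layer does: each Given/When/Then line is matched against a registered pattern and dispatched to a function, and the Then step performs the actual check. The `step` decorator, `run_scenario` function and shared `ctx` dictionary are hypothetical names for illustration only; this is not Cucumber’s API, and real tools such as Cucumber (or behave in Python) have their own step-definition syntax.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical step registry: (keyword, compiled pattern, step function).
# This only mimics the idea behind Cucumber; it is not Cucumber's API.
STEPS: list[tuple[str, re.Pattern, Callable[..., None]]] = []


def step(keyword: str, pattern: str):
    """Register a function as the definition of a Given/When/Then step."""
    def decorator(func: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((keyword, re.compile(pattern), func))
        return func
    return decorator


@step("Given", r"you are attending a major API conference")
def attending(ctx: dict) -> None:
    ctx["location"] = "API conference"


@step("When", r"you are in a session about (.+)")
def in_session(ctx: dict, topic: str) -> None:
    # Captured regex groups are passed on as extra arguments.
    ctx["session"] = topic


@step("Then", r"you can hear the moderator introduce the speakers")
def hear_moderator(ctx: dict) -> None:
    # The "check": confirm the expected outcome of the preceding steps.
    assert ctx.get("session") == "API Testing and Debugging", "wrong session"
    ctx["heard_intro"] = True


def run_scenario(lines: list[str]) -> dict:
    """Match each scenario line to a step definition and execute it in order."""
    ctx: dict = {}
    for line in lines:
        keyword, _, text = line.strip().partition(" ")
        for kw, regex, func in STEPS:
            match = regex.fullmatch(text)
            if kw == keyword and match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line!r}")
    return ctx


scenario = [
    "Given you are attending a major API conference",
    "When you are in a session about API Testing and Debugging",
    "Then you can hear the moderator introduce the speakers",
]
print(run_scenario(scenario))
# {'location': 'API conference', 'session': 'API Testing and Debugging', 'heard_intro': True}
```

A passing run only tells us that the scripted steps behaved as specified, nothing more about whether the session was any good.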

In the testing world, this kind of testing is often referred to as “checking”, simply because these tests confirm an expected outcome of an invoked action. And although testers acknowledge the value of checks (both automated and manual), they argue that checks are inherently insufficient to assess the quality of the target system. For example, what would checks like these say about the actual quality of the conference? What if we have a long list of scenarios like this (thousands of unit tests, BDD scenarios, etc.) and they all pass? Does that automatically make the conference a great, high-quality conference?

No, of course not. The conference could still be a total failure, because there is so much more to a high-quality conference experience than the measurable attributes checked by our scripted tests above. To really get a good picture of the quality of this conference, you would perhaps run surveys and monitor social media to see what people say, and ultimately you would go around and listen to the corridor discussions and debates over lunch. That aspect of quality is nearly impossible to automate and catch with a tool, because it requires someone to apply his or her domain knowledge and creatively “probe” the target being tested. The conference example is extreme in the sense that we would be testing in production (which we never ever do with APIs, right?), but if we had done a rehearsal, a “tester” would surely have done things like kill the internet connection, spill coffee, or simulate a presenter getting sick at the last second.

So how can we characterize the difference between testing and checking? I’ll refer you to the discussion at DevelopSense, which covers this topic extensively. The most important takeaway for me is that checking and testing are equally important, and that the debate should not be testers vs. developers (which it often boils down to) but sapient vs. non-sapient testing activities, each of which can be performed by testers and developers alike.

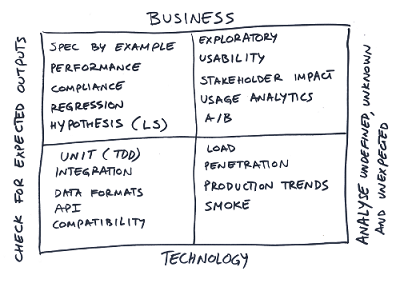

Lisa Crispin, who wrote a very influential book on Agile Testing a couple of years ago, created something she called the Agile Testing Quadrant to try to get to the bottom of how checking vs. testing activities could be applied to a project. Gojka Adcic just recently refined her original version, as follows:

To the left we have “checking” activities and to the right “testing”. The bottom half covers activities that validate technical aspects, while the top half faces the business. Most of what is done during development falls on the left side of the diagram: validating that a thing works as expected, preferably in an automated way. The right side holds mostly manual activities, focused on exploring, learning, challenging, and “breaking” stuff (like the conference tester example above). These are often difficult to automate because they require a skilled mind.

So, how can this be applied to APIs? Well, the checking part should be straightforward: all the frameworks mentioned above apply equally well to the implementation of an API. “Real” testing, on the other hand, would cover things like assessing the whole usability experience (as mentioned in earlier blog posts), such as the sign-up process, documentation, and customer support, to name a few. To further challenge the actual API, a tester would perhaps make API calls in the wrong order or with unexpected input, ignore HTTP cache headers when running a load test, and send incorrect HTTP authentication headers to make sure the API gives the user graceful feedback.
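Some of those probes can be sketched in Python. To keep the example self-contained, it runs against a hypothetical in-memory `handle_request` function standing in for an order API; the endpoints (`/orders`, `/orders/<id>/pay`), the bearer-token scheme and the specific status codes are all assumptions made for illustration. A real suite would send the same requests over HTTP to the API under test. The point is the question each probe asks: not “does the happy path work?” but “when I misuse the API, do I get a 4xx status with a readable error instead of a crash?”

```python
from __future__ import annotations

import json

# Hypothetical in-memory order API, standing in for a real HTTP service.
VALID_TOKEN = "secret-token"  # assumption: simple bearer-token auth
ORDERS: dict[str, dict] = {}


def handle_request(method: str, path: str, headers: dict[str, str], body: str = "") -> tuple[int, dict]:
    """Return (status code, JSON-like response body) for a request."""
    if headers.get("Authorization") != f"Bearer {VALID_TOKEN}":
        return 401, {"error": "Missing or invalid Authorization header"}
    if method == "POST" and path == "/orders":
        try:
            payload = json.loads(body)
        except ValueError:  # json.JSONDecodeError subclasses ValueError
            return 400, {"error": "Request body is not valid JSON"}
        quantity = payload.get("quantity") if isinstance(payload, dict) else None
        if type(quantity) is not int or quantity <= 0:  # also rejects bools
            return 422, {"error": "'quantity' must be a positive integer"}
        order_id = str(len(ORDERS) + 1)
        ORDERS[order_id] = {"quantity": quantity, "paid": False}
        return 201, {"id": order_id}
    parts = path.strip("/").split("/")
    if method == "POST" and len(parts) == 3 and parts[0] == "orders" and parts[2] == "pay":
        if parts[1] not in ORDERS:
            return 404, {"error": f"Order {parts[1]} does not exist"}
        ORDERS[parts[1]]["paid"] = True
        return 200, {"id": parts[1], "paid": True}
    return 404, {"error": "Unknown endpoint"}


def is_graceful(status: int, response: dict) -> bool:
    """Graceful failure: a 4xx status plus a non-empty, human-readable error."""
    return 400 <= status < 500 and bool(response.get("error"))


GOOD_AUTH = {"Authorization": f"Bearer {VALID_TOKEN}"}
PROBES = {
    "no auth header": ("POST", "/orders", {}, '{"quantity": 1}'),
    "wrong auth scheme": ("POST", "/orders", {"Authorization": f"Basic {VALID_TOKEN}"}, '{"quantity": 1}'),
    "malformed JSON": ("POST", "/orders", GOOD_AUTH, '{"quantity": '),
    "unexpected input": ("POST", "/orders", GOOD_AUTH, '{"quantity": "lots"}'),
    "wrong call order (pay before create)": ("POST", "/orders/999/pay", GOOD_AUTH, ""),
}

for name, (method, path, headers, body) in PROBES.items():
    status, response = handle_request(method, path, headers, body)
    print(f"{name}: {status} graceful={is_graceful(status, response)}")
```

Each probe encodes one “what if?”; the valuable part is a tester with the right mindset coming up with the next one.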

Finding all the rough edges and unhandled, unexpected usages of your API is extremely important, because that is ultimately what your users will run into when they start exploring it, both deliberately and by accident. And since the actual integration and usage of an API is usually automated, it lacks the wiggle room of a web UI, where users can often work around minor errors themselves. This unanticipated usage needs to be considered in advance, tested, and fixed by someone who has that mindset (analyzing and breaking stuff is cool!), not by someone who primarily wants to make sure things work as they should.
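One mechanical aid for hunting unanticipated usage before users find it is randomized probing, or fuzzing. The Python sketch below is a simple, hypothetical mutation fuzzer, not a specific tool: it feeds a handler a few deliberately odd values plus hundreds of randomly mutated copies of a valid request body, and flags every input that makes the handler crash or answer with a 5xx or an error without a message. The `create_order` and `naive_create_order` handlers and their `{"quantity": <positive int>}` contract are assumptions for illustration. Fuzzing complements a thoughtful tester rather than replacing one: it finds crashes, but it cannot judge whether the feedback makes sense to a user.

```python
from __future__ import annotations

import json
import random
import string
from typing import Callable


def create_order(body: str) -> tuple[int, dict]:
    """Hypothetical handler: expects a JSON body like {"quantity": <positive int>}."""
    try:
        payload = json.loads(body)
    except ValueError:  # json.JSONDecodeError subclasses ValueError
        return 400, {"error": "Request body is not valid JSON"}
    quantity = payload.get("quantity") if isinstance(payload, dict) else None
    if type(quantity) is not int or quantity <= 0:  # rejects bools and floats too
        return 422, {"error": "'quantity' must be a positive integer"}
    return 201, {"quantity": quantity}


def naive_create_order(body: str) -> tuple[int, dict]:
    """A happy-path-only version: passes the obvious check, never probed."""
    payload = json.loads(body)
    return 201, {"quantity": payload["quantity"]}


SEED_BODY = '{"quantity": 3}'
ODD_VALUES = [None, -1, 0, 2**63, 1.5, "3", [], {}, True, ""]


def mutate(text: str, rng: random.Random) -> str:
    """Apply 1-3 random character insertions, deletions or replacements."""
    chars = list(text)
    for _ in range(rng.randint(1, 3)):
        op = rng.choice(["insert", "delete", "replace"])
        pos = rng.randrange(len(chars) + 1)
        if op == "insert":
            chars.insert(pos, rng.choice(string.printable))
        elif pos < len(chars):
            if op == "delete":
                del chars[pos]
            else:
                chars[pos] = rng.choice(string.printable)
    return "".join(chars)


def fuzz(handler: Callable[[str], tuple], iterations: int = 500, seed: int = 42) -> list[tuple[str, str]]:
    """Return (input, problem) pairs for every input the handler mishandled."""
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    inputs = [json.dumps({"quantity": value}) for value in ODD_VALUES]
    inputs += [mutate(SEED_BODY, rng) for _ in range(iterations)]
    failures = []
    for body in inputs:
        try:
            status, response = handler(body)
        except Exception as exc:  # any crash is a finding
            failures.append((body, f"crashed: {exc!r}"))
            continue
        if status >= 500 or (status >= 400 and not response.get("error")):
            failures.append((body, f"ungraceful: {status} {response}"))
    return failures


print(len(fuzz(create_order)))  # 0
naive_failures = fuzz(naive_create_order)
print(len(naive_failures), naive_failures[:2])
```

Both handlers pass the obvious happy-path check with `SEED_BODY`; only the probing reveals that the naive one falls over on input nobody scripted.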

Please don’t get me wrong here: isn’t it great that developers are testing at all? YES! It’s awesome! I applaud any developer out there writing unit tests, BDD features and scenarios, Selenium scripts, you name it. This is why I find quality such an exciting field to be in: there is a huge ongoing shift in how testing and quality are achieved. To many developers it is now just plain obvious that testing and checking are part of their work; they care about quality! I love them for it!

So, whatever tools you use for testing, checking, verifying, and asserting, please keep using them. But also realize that there is much more to achieving top-notch quality than automating assertions and scenario tests. So do yourself and your customers a great favor and embrace a tester mentality and approach to quality in your team; both your team and your customers will cherish you for it.

You may find the original source here.